Introduction

TVM is an end-to-end compilation system for deep learning.

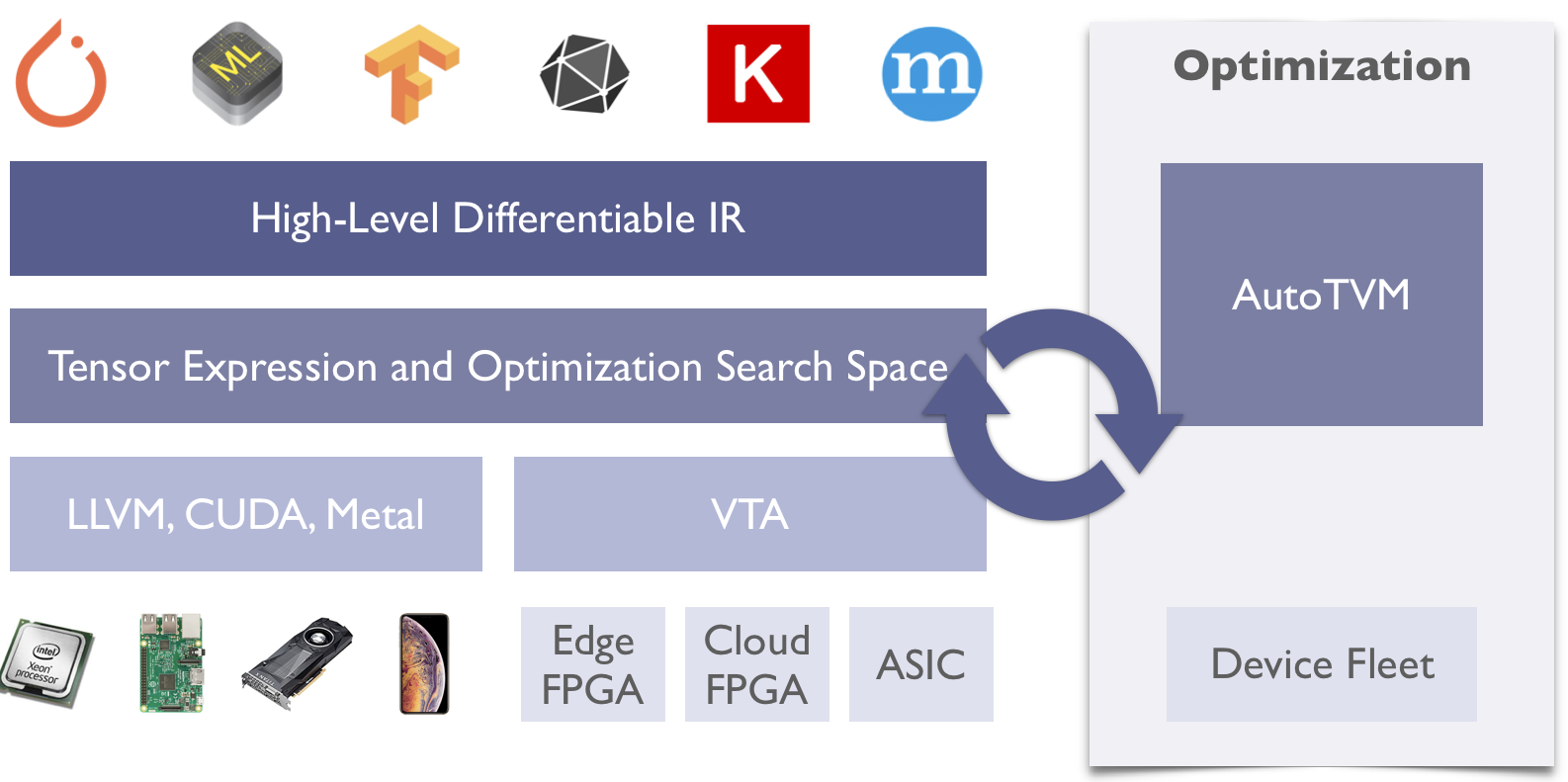

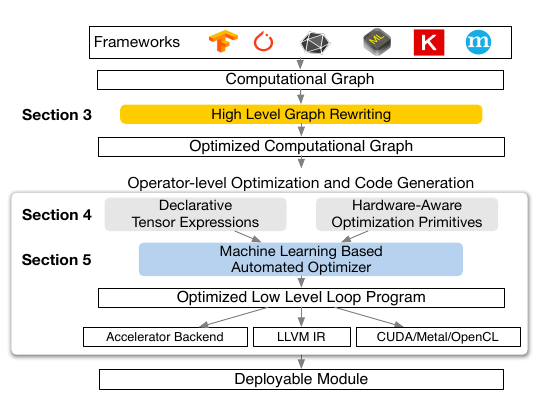

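Its main features are illustrated in the accompanying figures.

First, it compiles models from many front-end frameworks (Keras, MXNet, PyTorch, TensorFlow, CoreML, DarkNet, and others) to many hardware back ends, covering conventional CPUs and GPUs as well as specialized accelerators such as FPGAs and TPUs.

Second, it provides a complete automatic-optimization infrastructure that helps users reach high performance quickly on a new hardware platform.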

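What sets it apart

Compared with predecessors such as Halide, TVM has two innovations worth noting.

First:

Section 4 of the paper introduces an extensible way to describe tensor compute primitives (the primitives that hardware provides for specific matrix operations). This lets TVM add support for new hardware acceleration instructions quickly.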

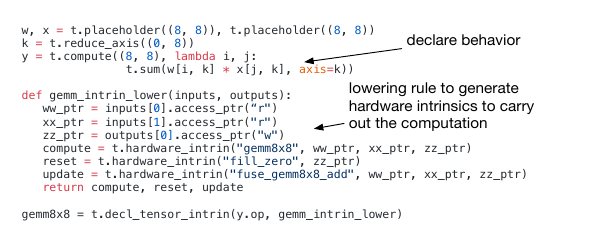

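As the accompanying figure illustrates, the approach is RISC-like: you only need to supply a small number of fine-grained basic steps, and complex hardware acceleration instructions can then be modeled by combining and configuring them.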
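One way to picture this idea is the plain-Python sketch below. It is not TVM's API; `tile_reset` and `tile_update` are hypothetical stand-ins for two fine-grained steps of an imagined 4x4 matrix-multiply unit, and `matmul_tensorized` shows how a larger matrix multiply can be expressed purely by combining them.

```python
# Conceptual sketch only: plain Python, not TVM's API.
# tile_reset / tile_update are hypothetical fine-grained hardware steps.
TILE = 4


def tile_reset(c, i0, j0):
    """Hypothetical step 1: clear one 4x4 accumulator tile of c."""
    for i in range(TILE):
        for j in range(TILE):
            c[i0 + i][j0 + j] = 0


def tile_update(a, b, c, i0, j0, k0):
    """Hypothetical step 2: c_tile += a_tile @ b_tile for one 4x4x4 block."""
    for i in range(TILE):
        for j in range(TILE):
            acc = 0
            for k in range(TILE):
                acc += a[i0 + i][k0 + k] * b[k0 + k][j0 + j]
            c[i0 + i][j0 + j] += acc


def matmul_naive(a, b):
    """Reference implementation used to check the tiled version."""
    n, m, p = len(a), len(b), len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(m)) for j in range(p)]
            for i in range(n)]


def matmul_tensorized(a, b):
    """Tile the loop nest so every inner block maps onto the two steps."""
    n, m, p = len(a), len(b), len(b[0])
    assert n % TILE == 0 and m % TILE == 0 and p % TILE == 0
    c = [[None] * p for _ in range(n)]  # uninitialized until tile_reset runs
    for i0 in range(0, n, TILE):
        for j0 in range(0, p, TILE):
            tile_reset(c, i0, j0)                  # once per output tile
            for k0 in range(0, m, TILE):
                tile_update(a, b, c, i0, j0, k0)   # once per reduction block
    return c
```

The outer loops never touch individual elements; they only schedule the two steps, which is the part a compiler would map onto real instructions.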

Second:

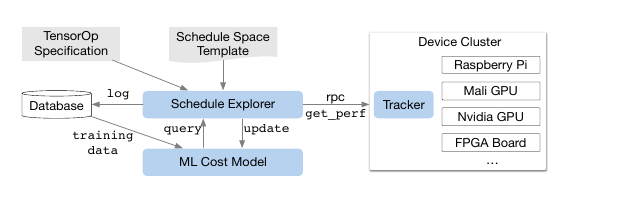

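The autotuner that TVM provides is quite powerful.

TVM supports conventional black-box auto-tuning, in which a black-box optimization algorithm repeatedly runs candidate programs on the hardware to measure their performance.

It also supports prediction-based auto-tuning. In this mode, TVM trains a performance prediction model from measurements collected on the hardware and uses that model to explore the tuning space quickly (in the paper, this outperforms the black-box approach). Model prediction is fast, taking under 1 ms, whereas an actual measurement run can take tens of times longer. The model also keeps learning from the hardware, while a black-box optimization algorithm has to start from scratch every time.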
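The prediction-based loop can be sketched in a few lines of plain Python. Everything here is an assumption made for illustration: the search space, the synthetic `measure_on_hardware` cost function, and the nearest-neighbour `predict` model are toys, not TVM's actual cost model or tuner. The point is only the shape of the loop: the cheap model ranks all candidates, and only the most promising ones are measured for real.

```python
import math
import random

# Hypothetical search space: (tile_x, tile_y) pairs, powers of two only.
SPACE = [(tx, ty) for tx in (1, 2, 4, 8, 16, 32) for ty in (1, 2, 4, 8, 16, 32)]


def measure_on_hardware(cfg):
    """Stand-in for a slow run on the device; returns a made-up cost."""
    tx, ty = cfg
    return abs(math.log2(tx) - 3) + abs(math.log2(ty) - 4) + 1.0


def predict(cfg, history):
    """Toy cost model: reuse the cost of the nearest measured config."""
    def dist(other):
        return sum((math.log2(x) - math.log2(y)) ** 2 for x, y in zip(cfg, other))
    return history[min(history, key=dist)]


def tune(budget=12, batch=4, seed=0):
    """Return (best_cfg, history) after exactly `budget` real measurements."""
    rng = random.Random(seed)
    history = {}  # cfg -> measured cost; this is the model's training data
    for cfg in rng.sample(SPACE, batch):  # bootstrap with random measurements
        history[cfg] = measure_on_hardware(cfg)
    while len(history) < budget:
        candidates = [c for c in SPACE if c not in history]
        # Cheap step: rank every unmeasured candidate with the model.
        candidates.sort(key=lambda c: predict(c, history))
        # Expensive step: measure only the top few, then the model "relearns".
        for cfg in candidates[: min(batch, budget - len(history))]:
            history[cfg] = measure_on_hardware(cfg)
    return min(history, key=history.get), history
```

Calling `tune()` returns the best configuration found together with all measurements taken; each round's results feed into the next round's predictions, which mirrors the "keeps learning from the hardware" property described above.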

TVM's relationship to other projects

https://github.com/apache/incubator-tvm/blob/master/docs/faq.rst

Relationship to Halide

https://github.com/apache/incubator-tvm/issues/682

http://docs.tvmlang.org/faq.html#tvm-s-relation-to-other-ir-dsl-projects answers the difference from existing projects, including Halide. In short, we specifically focus on deep learning, and optimize for multiple hardware backends (GPUs and other accelerators).

The major challenge is to make the schedule space complete enough to cover the state of art kernels for hardware back-ends we want to support, specifically gpu and other hardwares. The second challenge is to build the dsl representation to cover things we care about in deep learning(e.g. recurrence). The other issues include the ease of deployment and interpolation.

These challenges are not well addressed by existing frameworks(including Halide) and requires rethink and design of the stack as opposed to simply reuse an existing one.

You can also find that the TVM’s IR itself is evolving, and we continuously learn new lessons from hand optimization and tuning for various backends.

Relationship to MLIR

See the following reply from the TVM author in https://discuss.tvm.ai/t/google-lasted-work-mlir-primer/1721/15:

Interpretation of MLIR’s Vision

I think what you answered reflects MLIR’s vision. Make the abstract class of IR and derive dialects. But not necessarily provide specific pass for the dialect, so if X-IR is a dialect of MLIR, then there are dialect specific passes that is needed in the pass.

Polyhedral dialect is a dialect in MLIR. In the current case, the polyhedral IR is part of the mlir codebase, which gives the view of “native”, but non-the-less it is a dialect just like the other automatic optimization dialect. The fact that it is part of the native code base does give an opinionated view of what automatic optimization should be like in MLIR ecosystem. I think it is still very much an open problem, TVM has done a lot in this direction, and we can collectively innovate on this area.

How TVM can work with MLIR

First of all, MLIR won’t make TVM obsolete. In the contrary, it can help TVM stack by providing insights in IR design and possibly some lowering infrastructure. The community will keep improving our current IR infrastructure toward a better unified TVM-IR infra. We will try to define TVM dialects in MLIR to see if it makes sense to allow bi-directional translation between MLIR and TVM-IR, this way we can take benefit of some of the infra provided by MLIR and make TVM work together with MLIR’s ecosystem.

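Some preliminary impressions

From the information available so far, TVM looks like a good choice when targeting a new hardware platform or new computation logic.

A first look suggests that the documentation is fairly rich and that the Python API is reasonably easy to use.

Debugging

Building with -O0 -g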

    cmake ../ -DCMAKE_BUILD_TYPE=Debug

Getting per-op run times

Simply use debug_runtime in place of the regular runtime:

    from tvm.contrib.debugger import debug_runtime as graph_runtime

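For the implementation, see the RunIndividual function in src/runtime/graph/debug/graph_runtime_debug.cc. At its core, it simply times each op as it runs.

The useful output falls into two categories:

a. Per-op run times in execution order, printed by the back-end process (in a notebook, this does not appear in the browser; it appears in the terminal that launched the notebook).

b. Per-op run times sorted by share of total time, printed in the notebook.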
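The idea of timing every op individually, and the two views of the result described above, can be illustrated with a small stand-alone Python sketch. It does not call TVM; the op names and the lambdas standing in for kernels are hypothetical, and `run_individual` here only borrows the name of the C++ function for readability.

```python
import time


def run_individual(ops, repeat=3):
    """Time each op on its own; return (name, seconds) in execution order."""
    timings = []
    for name, fn in ops:
        start = time.perf_counter()
        for _ in range(repeat):
            fn()
        timings.append((name, (time.perf_counter() - start) / repeat))
    return timings


def rank_by_share(timings):
    """Return (name, seconds, percent) rows sorted by share of total time."""
    total = sum(t for _, t in timings) or 1e-12  # guard against a zero total
    ranked = sorted(timings, key=lambda item: item[1], reverse=True)
    return [(name, t, 100.0 * t / total) for name, t in ranked]


# Hypothetical ops: plain Python callables standing in for compiled kernels.
ops = [
    ("conv2d", lambda: sum(i * i for i in range(20000))),
    ("relu", lambda: [max(0, x) for x in range(-500, 500)]),
    ("softmax", lambda: sum(range(100))),
]

if __name__ == "__main__":
    timings = run_individual(ops)
    for name, seconds in timings:             # view (a): execution order
        print(f"{name:10s} {seconds * 1e6:10.1f} us")
    for name, seconds, pct in rank_by_share(timings):  # view (b): by share
        print(f"{name:10s} {pct:6.2f} %")
```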

Getting the IR after each pass

Locating a problem with git bisect

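In the tvm directory:

Use git bisect start to begin the binary search.

Then use git bisect good ${commit_id} and git bisect bad ${commit_id}, with a known-good and a known-bad commit respectively, to mark the ends of the search range.

At each step, rebuild with cd build; cmake ../ -DCMAKE_BUILD_TYPE=Debug; make -j 6, run TVM, and check whether it behaves correctly.

If it behaves correctly, run git bisect good; if not, run git bisect bad. If a particular revision cannot be judged (for example, because another bug interferes), use git bisect skip.

Once the offending commit is found, use git bisect reset to restore the original state.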
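For readers unfamiliar with what git bisect does under the hood, the search itself is an ordinary binary search over the commit range. The sketch below is a simplified model in plain Python, not git's implementation: `commits` and `is_bad` are hypothetical, linear history is assumed, and skip handling is left out.

```python
def first_bad_commit(commits, is_bad):
    """Find the first bad commit.

    commits is ordered oldest to newest; commits[0] is known good and
    commits[-1] is known bad. Returns (commit, number_of_tests).
    """
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] good, commits[hi] bad
    steps = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        steps += 1                # one build-and-test cycle
        if is_bad(commits[mid]):  # the manual answer would be: git bisect bad
            hi = mid
        else:                     # the manual answer would be: git bisect good
            lo = mid
    return commits[hi], steps


# Hypothetical history of 100 commits where the regression lands in c37.
commits = [f"c{i}" for i in range(100)]
culprit, steps = first_bad_commit(commits, lambda c: int(c[1:]) >= 37)
```

Because the range halves on every test, about 100 commits need at most 7 build-and-test cycles, which is why bisecting pays off even when each TVM rebuild is slow.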